Union Action Across Borders

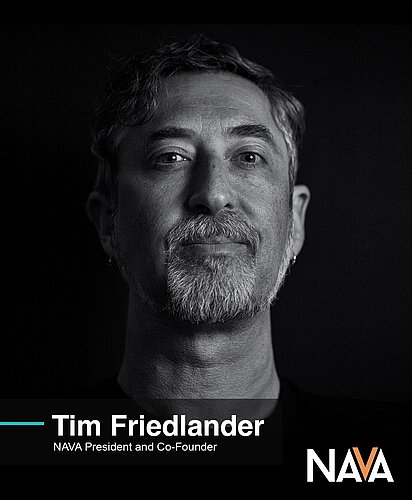

Unions are playing an increasing role in negotiating AI usage in contracts, focusing on consent, control and compensation. As the technology advances, it raises questions about who holds the rights to character simulations and digital doubles. This was one of the contributing factors to the SAG-AFTRA actors' strikes in 2023 and beyond. Zeke Alton, an actor and volunteer negotiator for SAG-AFTRA, shared insight into the union's efforts: "Before the TV/theatrical negotiations, SAG-AFTRA was engaged in commercial negotiations where they tried to bargain the use of performer services for AI replication, digital double rights. As this technology hit the forefront of the news, we then saw strikes in TV, film and interactive contracts. Since then, we've been working in partnership and bargaining to work our way through and come up with proper consent, compensation and transparency."

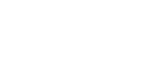

While much of the highly publicised union activity around AI in voice acting has been concentrated in the US through SAG-AFTRA, Europe has seen its own response, most notably through PASAVE and United Voice Artists (UVA). PASAVE (Plataforma de Asociaciones y Sindicatos de Artistas de Voz de España) emerged in Spain in response to the growing use of AI in dubbing and voiceover. As Tim Friedlander, President and Co-Founder of the National Association of Voice Actors (NAVA), detailed, "PASAVE launched specifically to address AI and became a central voice in the European conversation. The group initially proposed a single AI clause." But what began as a unified front soon grew more complex. Tim explained, "That single clause now turned into four variations, depending on whether companies accepted it outright, modified it or rejected it entirely."